Qing Luan

USTC

Hefei, China

qing 182@hotmail.com

Steven M. Drucker

Microsoft Live Labs

Redmond, WA

sdrucker@microsoft.com

Johannes Kopf

University of Konstanz

Konstanz, Germany

kopf@inf.uni-konstanz.de

Ying-Qing Xu

Microsoft Research Asia

Beijing, China

yqxu@microsoft.com

Michael F. Cohen

Microsoft Research

Redmond, WA

mcohen@microsoft.com

This paper explores solutions for annotating very large images (more than 1 billion pixels). Tools have appeared on the internet for panning and zooming such giant images, and this paper addresses the annotation issues that arise while panning and zooming.
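The summary doesn't say how these web viewers work. A common approach, assumed here and not taken from the paper, is to store the image as a pyramid of fixed-size tiles: the resolution is halved at each level until the whole image fits in one tile, so the viewer only fetches the tiles in view at the current zoom. This minimal Python sketch uses a hypothetical 40,000 × 25,000 image (exactly one billion pixels) and 256-pixel tiles.

```python
import math

def pyramid_levels(width, height, tile=256):
    """Image sizes from full resolution down to the first level that fits in one tile."""
    levels = [(width, height)]
    while max(width, height) > tile:
        # Halve each dimension, rounding up so no edge pixels are dropped.
        width, height = (width + 1) // 2, (height + 1) // 2
        levels.append((width, height))
    return levels

def tile_count(width, height, tile=256):
    """Number of tiles needed to cover one level of the pyramid."""
    return math.ceil(width / tile) * math.ceil(height / tile)

levels = pyramid_levels(40_000, 25_000)
print(len(levels))             # 9
print(tile_count(*levels[0]))  # 15386
```

Even at full zoom, a 1920 × 1080 window overlaps only a few dozen of those tiles, which is why panning and zooming stays fast regardless of the total image size.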

Wednesday, May 12, 2010

HCI Remixed

Ch1 - My Vision Isn't My Vision: Making a Career Out of Getting Back to Where I Started

William Buxton

Microsoft Research, Toronto, Canada

This chapter is about a musician who got into digital music in the 1970s. Through his brother, he sat down with a device called Mabel at the NRC. Not being fluent or adept at the keyboard, he didn't use it much; instead, he used two dials to make each note, which he found very intuitive. He describes this as a significant achievement in CHI because it was one of the first systems designed with the naive user in mind, in this case musicians. You did not need a computer scientist to program the notes in; you simply did what felt natural.

He then contrasts that with today's systems, which are not as user-friendly, and describes his life's work as making computer systems that user-friendly again.

Ch4 - Drawing on SketchPad: Reflections on Computer Science and HCI

Joseph A. Konstan

University of Minnesota, Minneapolis, Minnesota, U.S.A

In this chapter, the author discusses Sutherland's SketchPad program, which used a light pen to draw on a million-pixel display, draw and repeat patterns, apply constraints, link shapes, and more. He talks about how natural it is for people to point directly at what they want to manipulate rather than using an indirect device like a mouse. He claims the system even anticipated object-oriented programming through its use of ring structures and inheritance.
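SketchPad's "master" drawings and their "instances" are often cited as a precursor of classes and objects. The Python sketch below is a hypothetical model of that idea, not SketchPad's actual data structures. Each instance stores only a reference to its master plus a placement, so editing the master once changes every instance. The names `Master`, `Instance`, and `render` are invented for illustration.

```python
from dataclasses import dataclass, field

Point = tuple[float, float]

@dataclass
class Master:
    """Hypothetical stand-in for a master drawing: the shared definition."""
    name: str
    lines: list[tuple[Point, Point]] = field(default_factory=list)

@dataclass
class Instance:
    """A placed copy that keeps a reference to its master instead of copying its lines."""
    master: Master
    dx: float = 0.0
    dy: float = 0.0
    scale: float = 1.0

    def render(self) -> list[tuple[Point, Point]]:
        # Geometry is derived from the master on every call, so an edit to the
        # master shows up in every instance without touching the instances.
        def place(p: Point) -> Point:
            return (p[0] * self.scale + self.dx, p[1] * self.scale + self.dy)
        return [(place(a), place(b)) for a, b in self.master.lines]

shape = Master("bracket", [((0, 0), (1, 0))])
left = Instance(shape, dx=10)
right = Instance(shape, dy=5, scale=2)
shape.lines.append(((0, 0), (0, 1)))            # edit the master once...
print(len(left.render()), len(right.render()))  # 2 2: both instances picked it up
```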

He goes on to philosophize about why programmers limit themselves to what the computer already has to offer.

Ch5 - The Mouse, the Demo, and the Big Idea

Wendy Ju

Stanford University, Stanford, California, U.S.A.

In this chapter, the author discusses the effects of introducing revolutionary concepts into HCI, specifically the mouse. She describes how Doug Engelbart's demo was initially not well received at all: even though he had invented techniques such as drag and drop with the mouse, the work didn't fit any of the predominant schools of thought at the time.

Discussion:

I feel like the mouse demo chapter reminds me a lot of the introduction of color to computer displays. Color was seen as nice to have, but not as something that actually improved productivity, so the computer world at large was slow to adopt it.

Thursday, April 22, 2010

Obedience to Authority

"For we know that the law is spiritual, but I am of the flesh, sold under sin. For I do not understand my own actions. For I do not do what I want, but I do the very thing I hate. Now if I do what I do not want, I agree with the law, that it is good. So now it is no longer I who do it, but sin that dwells within me. For I know that nothing good dwells in me, that is, in my flesh. For I have the desire to do what is right, but not the ability to carry it out. " - Romans 7:14-18

"If people are asked to render a moral judgment on what constitutes appropriate behavior in this situation, they unfailingly see disobedience as proper. But values are not the only forces at work in an actual, ongoing situation. They are but one narrow band of cause in the total spectrum of forces impinging on a person" - pg 6

Stanley Milgram presents an interesting case in which people in his experiments are seen to

Tuesday, March 23, 2010

The Inmates are Running the Asylum (P2)

Summary

Ch8 - An Obsolete Culture

The author criticizes the distance between visual designers and program developers, arguing that this gap is a major problem for the end user. He takes several shots at Microsoft, citing its Explorapedia project in particular. He feels that programmers are incapable of designing correctly because they take too many shortcuts that save themselves time instead of focusing on the end user. Also, because of Microsoft's success, other companies will try to imitate its approach.

He also cites Sagent Technology as a problem-riddled company. Each person in the company tended to assume the general customer was like the customers they personally interacted with: product management thought customers needed hand-holding, senior developers thought customers were seasoned veterans, and so on. It's like the story of the blind men and the elephant.

He goes on to say that today's programmers have an obsolete mentality as well: they focus on putting as little strain on the processor as possible at the expense of the user, without taking into account the vast advances in technology over the last few decades.

Part IV - Interaction Design is Good Business

Ch9 - Designing for Pleasure

In his company he implements a new way of thinking. His teams invent personas, hypothetical archetypes of typical end users, imagine everything about each person, and then design the software with those personas in mind. Logic would seem to tell us to design broadly to accommodate as many users as possible, but his view is that "Logic is wrong. You will have far greater success by designing for a single person." He argues that software should be like cars: there are several models of the same thing, and each user picks the one that fits them best.

We also need to realize that no single user fits the "average" user definition or fits snugly into a category of users (e.g., no one is completely tech illiterate). Designing for a specific user also ends arguments about which features should or should not be implemented.

Projects should cater to a fixed set of personas, with one primary persona whose needs take precedence over the others'.

The case study was Sony Trans Com's P@ssport, an in-flight entertainment product that brought video on demand to the screens in the seatbacks in front of passengers. The interface required 6 clicks to get to a movie, and if you decided you didn't want it, another 6 clicks to get back out. A touch screen seemed like an intuitive solution because the screen was within arm's length, but the constant tapping would anger the person in front of you, since every tap would feel like a tap on the back of their head.

They created 4 passenger personas and 6 airline-worker personas. The old, crotchety passenger, Clevis, became their primary persona. As a result, they introduced a physical knob, resembling a radio knob, that Clevis would find intuitive.

Ch10 - Designing for Power

"The essence of good interaction design is to devise interactions that let users achieve their practical goals without violating their personal goals."

Basically, don't make the user feel stupid. Also, don't confuse tasks with goals. If your goal is to rest, you must first finish all your homework: the goal is rest, the task is homework. Goals stay the same, but tasks change with technology. For example, getting home is the goal. In 1850 you would bring a gun and a wagon; in 2010 you get on a plane and leave the gun behind. In both cases the goal is to get home; only the tasks change. The goal is the end, the task is the means.

The author pushes for goal-directed design over task-directed design, and for satisfying personal goals before practical goals. Letting users get a lot done without feeling stupid should take precedence over giving them tons of features.

He distinguishes between Personal goals (goals of the end user), Corporate goals (goals of the company), Practical goals (goals that bridge the two), and False goals (goals that shouldn't drive the design, such as easing software creation, saving memory, or speeding up data entry).

Software should treat people the way a considerate person treats others. It should be friendly and polite: forgiving of errors, helpful whenever possible, and demanding minimal sacrifice from the user.

Some examples are below:

Polite software is interested in me

Polite software is deferential to me

Polite software is forthcoming

Polite software has common sense

Polite software anticipates my needs

Polite software is responsive

Polite software is taciturn about its personal problems

Polite software is well informed

Polite software is perceptive

Polite software is self-confident

Polite software stays focused

Polite software is fudgable

Polite software gives instant gratification

Polite software is trustworthy

The case study for this chapter was Elemental Drumbeat, a product for creating dynamic, database-backed websites. The problem was that its target audience consisted of two completely different personas, one very artsy and one very techy, and the coders tended to code for the techy persona's needs. Few companies had targeted the artsy type, so the initial solution was to give the artsy user more power than they were used to, but this only made the artsy user more dependent on the techy one, which was a turn-off. As the web advanced, the two needed to work together, so the program was given two interfaces: one for the artsy webmaster and one for the techy programmer.

Ch11 - Designing for People

scenario - concise description of a persona using a software-based product to achieve a goal.

Scenarios

daily use - frequently performed

necessary use - must be performed but not frequently

edge case - anomalies (can usually be ignored, but often aren't)

inflecting the interface - a technique for making a program easier to use but not sacrificing functionality

- offering only the functions that are necessary when they are necessary

perpetual intermediates - most people are not beginners or experts but are perpetually in between
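Inflecting the interface by scenario can be sketched as a simple placement rule. Daily-use commands stay visible, necessary-use commands sit one level down, and edge cases remain available but out of the way. The command names and the three placements below are hypothetical and only illustrate the idea.

```python
from enum import Enum

class Scenario(Enum):
    DAILY = "daily use"
    NECESSARY = "necessary use"
    EDGE = "edge case"

# Hypothetical command catalogue for an imaginary editor.
COMMANDS = {
    "Save": Scenario.DAILY,
    "Undo": Scenario.DAILY,
    "Print": Scenario.NECESSARY,
    "Export as CSV": Scenario.NECESSARY,
    "Change character encoding": Scenario.EDGE,
}

# Where each kind of command lives: nothing is removed, only moved out of the way.
PLACEMENT = {
    Scenario.DAILY: "toolbar",          # always visible
    Scenario.NECESSARY: "menu",         # one click away
    Scenario.EDGE: "advanced dialog",   # still available, but hidden by default
}

def inflect(commands):
    """Group commands by the surface their usage scenario suggests."""
    layout = {surface: [] for surface in PLACEMENT.values()}
    for name, scenario in commands.items():
        layout[PLACEMENT[scenario]].append(name)
    return layout

print(inflect(COMMANDS))
```

Nothing is removed. Edge-case functions are simply moved out of the way, which matches the goal of easing use without sacrificing functionality.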

Part V - Getting Back into the Driver's Seat

Ch12 - Desperately Seeking Usability

Ch13 - A Managed Process

Ch14 - Power and Pleasure

Monday, March 22, 2010

Scratch Input: Creating Large, Inexpensive, Unpowered and Mobile Finger Input Surfaces

Authors

Chris Harrison Scott E. Hudson

Human-Computer Interaction Institute

Carnegie Mellon University, 5000 Forbes Ave., Pittsburgh, PA 15213

{chris.harrison, scott.hudson}@cs.cmu.edu

Summary

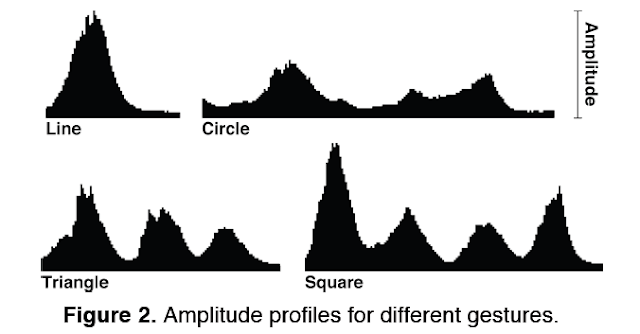

Scratch as a computer input has never really been exploited on a mass scale before. Scratching a surface such as wood, paint, or fabric produces a high frequency sound that is actually unique to the gesture that it corresponds with.

These sounds propagate better through the material itself than through the air. For instance, if you scratch the surface of your desk, you can hear it with some clarity, just as you would hear someone next to you talking. However, if you put your ear to the surface of the desk, the sound becomes amplified and outside noise has minimal effect on what you hear. Because sound travels so well through dense materials, gestures from several meters away can be recognized accurately.

Using a microphone attached to a stethoscope and a high-pass filter to remove noise, the frequency profile of the sound input can be constructed and categorized using a decision tree. These gestures can then be mapped to specific actions in a computing environment, such as launching and exiting programs, silencing music players, or increasing/decreasing volume. Nearly every dense surface could be turned into an input device, including cell phones, tables, and walls.
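The pipeline described above (filter, extract a feature, classify with a decision tree) can be sketched in a few lines of Python. This illustrates the general technique under stated assumptions and is not the authors' implementation. It uses a first-order high-pass filter, counts bursts in the amplitude envelope as a simple stand-in feature, and classifies with a hand-written decision tree whose gesture labels and cut points are hypothetical. The input is a synthetic signal.

```python
import math

def high_pass(samples, sample_rate, cutoff_hz):
    """First-order (RC) high-pass filter: keeps scratch energy, suppresses low-frequency hum."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out = [0.0] * len(samples)
    for i in range(1, len(samples)):
        out[i] = alpha * (out[i - 1] + samples[i] - samples[i - 1])
    return out

def count_peaks(signal, window, threshold):
    """Count bursts: windows whose mean absolute amplitude rises above the threshold."""
    peaks, above = 0, False
    for start in range(0, len(signal), window):
        chunk = signal[start:start + window]
        level = sum(abs(s) for s in chunk) / len(chunk)
        if level > threshold and not above:
            peaks += 1  # a new burst starts in this window
        above = level > threshold
    return peaks

def classify(peak_count):
    """Toy decision tree over a single feature; labels and cut points are hypothetical."""
    if peak_count == 0:
        return "none"
    if peak_count == 1:
        return "single swipe"
    if peak_count == 2:
        return "double swipe"
    return "triple swipe or more"

# Synthetic signal: 50 Hz hum throughout plus two 50 ms bursts of a 3 kHz "scratch".
rate, n = 16_000, 3_200
mixed = [
    math.sin(2 * math.pi * 50 * i / rate)
    + (math.sin(2 * math.pi * 3_000 * i / rate) if (i // 800) % 2 == 0 else 0.0)
    for i in range(n)
]
peaks = count_peaks(high_pass(mixed, rate, cutoff_hz=1_000), window=160, threshold=0.2)
print(peaks, classify(peaks))  # 2 double swipe
```

Without the filter, the hum alone would push every window over the threshold, which is why the high-pass stage comes first.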

Participants were shown how to use the Scratch Input system, after which a program told them which gesture to produce. Each participant repeated each gesture 5 times, for a total of 30 trials per participant (i.e., six gestures) and 450 trials across all 15 participants.

The results are shown in the image above. They were sufficient as a proof of concept for using scratching as a source of computer input.

Discussion

At first I was incredibly skeptical about this research. I thought about how ridiculous it would be for people to scratch things in order to control their computers. However, the video definitely sold me on the concept. Installing just a few of these cheap microphones around your house could transform nearly every surface into a computer input console. Every wall, desk, or even your car's dashboard or steering wheel could be used as an input device. I will say, though, that the success rates will probably need to be much higher before this reaches the mass market. Having a gesture misclassified 1 out of every 5 or 10 times is not acceptable for mass distribution.

Sunday, March 21, 2010

Predictive Text Input in a Mobile Shopping Assistant: Methods and Interface Design

Authors

Petteri Nurmi, Andreas Forsblom, Patrik Floreen

Helsinki Institute for Information Technology HIIT

Department of Computer Science, P.O. Box 68, FI-00014 University of Helsinki, Finland

firstname.lastname@cs.helsinki.fi

Peter Peltonen, Petri Saarikko

Helsinki Institute for Information Technology HIIT

P.O. Box 9800, FI-02015 Helsinki University of Technology TKK, Finland

firstname.lastname@hiit.fi

Summary

This paper studied the use of text prediction for creating shopping lists. The authors compiled an automatically generated collection of 10K shopping lists to build their dictionary of suggestible words, and then implemented 8029 association rules, sorted by confidence. These rules reorder the suggested words to fit what the user has already entered rather than simply listing them alphabetically. For instance, if the list already contains "cereal" and the user types the letter 'm', the program suggests "milk" before "macaroni".
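Ranking suggestions by association-rule confidence can be sketched as follows. The rules, confidences, and vocabulary are made up for illustration. The paper's rules were derived from its shopping-list corpus, and its exact scoring may differ. Each word matching the typed prefix is scored by the highest confidence of any rule whose antecedent is already on the list, with alphabetical order as the tie-breaker.

```python
# Hypothetical association rules: (item already on list, suggested item) -> confidence.
RULES = {
    ("cereal", "milk"): 0.62,
    ("cereal", "bananas"): 0.30,
    ("pasta", "macaroni"): 0.10,
}
VOCABULARY = ["macaroni", "milk", "mustard", "bananas", "bread"]

def suggest(prefix, current_list, rules=RULES, vocabulary=VOCABULARY):
    """Words matching the prefix, best rule confidence first, then alphabetical."""
    candidates = [w for w in vocabulary if w.startswith(prefix) and w not in current_list]

    def score(word):
        # Best confidence of any rule that fires given what is already on the list.
        return max((conf for (ante, cons), conf in rules.items()
                    if cons == word and ante in current_list), default=0.0)

    return sorted(candidates, key=lambda w: (-score(w), w))

print(suggest("m", ["cereal"]))  # ['milk', 'macaroni', 'mustard']
print(suggest("m", []))          # ['macaroni', 'milk', 'mustard']
```

With an empty list no rule fires, so the ranking falls back to plain alphabetical order, which is the behavior the association rules are meant to improve on.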

The user study compared mobile devices with text prediction against devices without it (the control group). Within these groups, users were also asked to enter their shopping lists with either both hands or only one hand. Participants were given candy for taking part in the study.

The results showed that users entered text about 5 wpm faster with text prediction than without it, and the gain was even larger for people typing with two hands.

Discussion

The user study in this paper was hilarious! They gave the participants candy? Really? Don't most studies actually pay their participants? Also, it said that one participant was removed from the study because of their "substantially high typing error rate". I don't really understand why they decided to test the difference between one-handed and two-handed typing. It seemed like an arbitrary test that only produced more useless information.

From Geek to Sleek: Integrating Task Learning Tools to Support End Users in Real-World Applications

Authors

Aaron Spaulding, Jim Blythe, Will Haines, Melinda Gervasio

Artificial Intelligence Center

SRI International

333 Ravenswood Ave.

Menlo Park, CA 94025

{spaulding, haines, gervasio}@ai.sri.com

USC Information Sciences Institute

4676 Admiralty Way

Marina del Rey, CA 90292

blythe@isi.edu

Summary

These researchers combined forces to create ITL, an integrated task learning system. Basically, it aims to make script creation friendlier for the average end user. They also wanted something less nitpicky than macro recorders.

They integrated their task learning technology, including components called LAPDOG and Tailor, into existing military software applications. Initial versions were disliked by users, and the product was updated to reflect their suggestions. In addition to letting users specify a particular set of scripted steps, the program let users edit, copy, and paste scripts. In the end, they found that the product does increase productivity because it automates repetitive tasks for users.

Discussion

My favorite line was "this format was immediately derided as 'a bunch of geek [stuff]' by our users". I may have actually chuckled out loud a little to see those brackets in a scholarly paper. Also, considering the simplicity of the study's topic I understand why this was labeled under the "short" papers list.

Pulling Strings from a Tangle: Visualizing a Personal Music Listening History

Authors

Dominikus Baur

Media Informatics, University of Munich

Munich, Germany

dominikus.baur@ifi.lmu.de

Andreas Butz

Media Informatics, University of Munich

Munich, Germany

andreas.butz@ifi.lmu.de

Summary

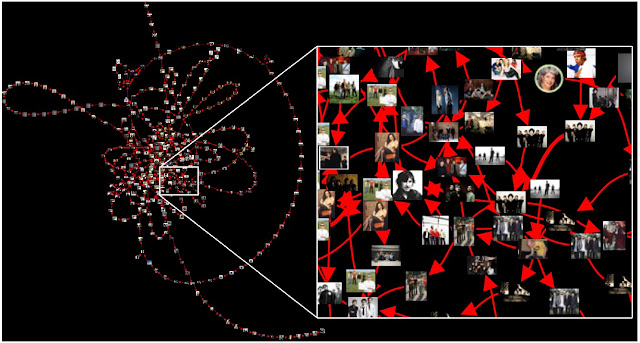

Several methods for helping music listeners create playlists already exist. Most mp3 players use ID3 tags, which contain album, artist, year, genre, and similar information. While this information lets users quickly find particular songs, building a playlist from it requires intimate knowledge of the actual music behind the ID3 tags. Others, such as Pandora, use machine learning techniques to categorize songs and suggest future songs based on the machine's recommendations. Still others, such as Last.fm, study the listening histories of thousands of users and suggest future songs based on the similarities between those histories and the user's own.

To obtain the data needed for the project, they used Audioscrobbler, Last.fm's tracking software. From this data they created three different visualizations. The first is the Tangle, a global view of the listening history. Outer loops represent anomalies, songs that were listened to without correlation to other songs. Songs placed close to each other are relatively similar, and the thickness of a line represents how often a particular sequence of songs was listened to.
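The Tangle's line thickness can be illustrated by counting how often one song directly followed another in the scrobbled history. This minimal sketch uses a made-up history and a hypothetical linear mapping from counts to stroke widths. The paper's actual layout and scaling are not described in this summary.

```python
from collections import Counter

def transition_counts(history):
    """How often each song was followed directly by another song."""
    return Counter(zip(history, history[1:]))

def line_width(count, max_count, min_px=1.0, max_px=8.0):
    """Hypothetical linear mapping from a transition count to a stroke width in pixels."""
    return min_px + (max_px - min_px) * count / max_count

history = ["A", "B", "C", "A", "B", "D", "A", "B", "C"]
counts = transition_counts(history)
busiest = max(counts.values())
for (src, dst), n in counts.most_common(3):
    print(f"{src} -> {dst}: n={n}, width {line_width(n, busiest):.1f}px")
```

Here A → B occurs three times and gets the thickest line, while one-off transitions such as B → D get thin ones. In the Tangle, songs reached only through such rare transitions are the ones that end up on the outer loops.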

The second visualization is Strings, which shows past listening sequences in a very ordered and organized way.

The third visualization, Knots, shows the user which songs appear in several strings, giving a better picture of which songs they listen to most.
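Strings and Knots can be illustrated together. First, split the history into listening sessions (the strings). Then find songs that occur in more than one session (the knots). The summary doesn't say how the paper segments history into strings. This sketch assumes that a pause of more than 30 minutes starts a new string and uses made-up timestamps in seconds.

```python
from collections import Counter

def split_into_strings(plays, gap_seconds=30 * 60):
    """Split (timestamp, song) plays into sessions wherever the pause exceeds gap_seconds."""
    strings, current, last_time = [], [], None
    for timestamp, song in plays:
        if last_time is not None and timestamp - last_time > gap_seconds:
            strings.append(current)
            current = []
        current.append(song)
        last_time = timestamp
    if current:
        strings.append(current)
    return strings

def knots(strings, min_strings=2):
    """Songs that occur in at least min_strings different sessions, sorted by name."""
    counts = Counter(song for s in strings for song in set(s))
    return sorted(song for song, n in counts.items() if n >= min_strings)

plays = [(0, "A"), (200, "B"), (400, "C"), (5000, "B"), (5200, "D"), (9000, "B"), (9200, "A")]
strings = split_into_strings(plays)
print(strings)         # [['A', 'B', 'C'], ['B', 'D'], ['B', 'A']]
print(knots(strings))  # ['A', 'B']
```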

Users can then define paths their music should take between waypoints, along with a "novelty" factor that determines how unusual the path between waypoints is.

Discussion

This sounds like a really cool idea, but more of a novelty for music culture. It would be comparable to the visualizations that iTunes and Windows Media Player offer: they're really cool to look at, but no one really uses them more than a couple of times. I could be wrong, since I haven't actually used it, and unlike the visualizations offered by Microsoft and Apple, Tangle does offer some practical use. The 1,000-song limit is a bit of a problem, though.

Parakeet: A Continuous Speech Recognition System for Mobile Touch-Screen Devices

Authors

Keith Vertanen and Per Ola Kristensson

Cavendish Laboratory, University of Cambridge

JJ Thomson Avenue, Cambridge, UK

{kv277, pok21}@cam.ac.uk

Summary

This paper explores speech recognition as a way to input text on a touchscreen mobile device. Speech recognition has generally been kept out of mobile phones by the processing resources required to convert speech to text effectively. Not only does it normally require far more resources than the processor can spare, but the result is usually error-prone (e.g., Google Voice voicemail transcriptions). Despite these setbacks, speech is very attractive as a text input method: users already know how to speak, so no training is involved, and people can typically speak up to 200 wpm, which far surpasses even the best typists (especially mobile typists).

The researchers built Parakeet to address this issue, following four design principles. First, avoid cascading errors involving phase offset, which they did by using a multimodal speech interface. Second, exploit the speech recognition hypothesis space, which they did by offering the user several choices drawn from multiple recognition hypotheses. Third, support efficient and practical interaction by touch, which they did with an interface that relies only on touch, so it can be used with or without a stylus. Lastly, support fragmented interaction; in other words, the device should anticipate the ADD tendencies of its user. They did this by offering text predictions and audibly alerting the user when their speech has finished being converted to text.
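The "several choices from several recognition hypotheses" idea can be sketched as collapsing an n-best list into per-word slots. Each slot lists alternative words ranked by total hypothesis probability, so the user can tap a different word instead of retyping. This is a simplified, hypothetical illustration. It assumes every hypothesis has the same number of words, whereas a real recognizer aligns hypotheses of different lengths (for example into a word confusion network). The names `confusion_slots` and `max_choices` are made up here.

```python
from collections import defaultdict


def confusion_slots(nbest, max_choices=3):
    """Turn an n-best list into per-slot word choices, most probable first.

    nbest: list of (sentence, probability) pairs. Simplifying assumption:
    all sentences have the same word count, so slot i lines up across them.
    """
    split = [(sentence.split(), prob) for sentence, prob in nbest]
    n_words = len(split[0][0])
    if any(len(words) != n_words for words, _ in split):
        raise ValueError("this sketch assumes equal-length hypotheses")
    slots = []
    for i in range(n_words):
        mass = defaultdict(float)  # total probability behind each word in slot i
        for words, prob in split:
            mass[words[i]] += prob
        ranked = sorted(mass, key=lambda w: (-mass[w], w))
        slots.append(ranked[:max_choices])
    return slots
```

The top word of each slot spells out the recognizer's best guess, and the remaining entries are the one-tap corrections the interface could offer.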

Participants used the device both seated indoors and walking outdoors to test its effectiveness and mobility. They were given a fixed set of sentences, 8 to 16 words long, to speak during 30-minute trials. After speaking a sentence, they tried to correct it using Parakeet's correction interface before moving on to the next one. On average, participants completed 41 sentences indoors and 27 sentences outdoors during the 30-minute trials.

Results indicated that, including time spent on correction, participants averaged 18 wpm indoors and 13 wpm outdoors.
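Text-entry studies conventionally report wpm by counting one "word" as five characters (spaces included) and by not timing the first character. It is an assumption here that Parakeet's figures use this convention, since the summary does not give the formula. Under that convention, the arithmetic looks like this:

```python
def words_per_minute(transcribed: str, seconds: float) -> float:
    """Entry rate in wpm, counting one "word" as 5 characters (spaces included).

    The clock conventionally starts at the first character, so it is not counted.
    """
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return (len(transcribed) - 1) / seconds * 60 / 5
```

For example, 31 characters of corrected text entered in 20 seconds works out to 18 wpm, the indoor average reported above.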

Discussion

I don't really know the average typing speed of phone texters, but I would imagine that 18 wpm is a little slow, especially for people who text frequently. This rate would obviously improve vastly if the recognition algorithm were more accurate, but even the almighty Google is far from achieving that feat.

“My Dating Site Thinks I’m a Loser”: Effects of Personal Photos and Presentation Intervals on Perceptions of Recommender Systems

Authors

Shailendra Rao

Stanford University

shailo@stanford.edu

Tom Hurlbutt

Stanford University/ Intuit

hurlbutt@cs.stanford.edu

Clifford Nass

Stanford University

nass@stanford.edu

Nundu JanakiRam

Stanford University/ Google

nundu@google.com

Summary

This study explores the effects that bad recommendations from recommender systems can have on the people who use them. For example, if someone's TiVo mistakenly concludes that they are gay and recommends programs aimed at gay viewers, the user might deliberately watch more "guy" programming to compensate for the miscategorization.

The situation explored in this paper is online dating. People will lie about themselves, omit information, or submit misleading pictures to present their "ideal" self. False information like this skews the system and matches users with people who differ substantially from what they expected. This frustrates both parties, and the dating site can no longer effectively carry out its purpose.

The study used a new algorithm called MetaMatch, which took participants' answers to a set of personal questions and used them to deliberately suggest undesirable matches. After receiving the matches, participants rated their recommendations and filled out a post-questionnaire.

During the questionnaire, half of the participants saw the photo they had submitted next to the questions as they answered them, and the other half saw no picture. In addition, half of the participants were shown 4 possible (undesirable) matches after each set of 10 questions, while the other half were shown matches only after finishing the entire questionnaire.
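The two manipulations form a 2 x 2 between-subjects design: photo vs. no photo, crossed with matches after every 10 questions vs. only at the end. The summary does not say how participants were assigned, so the following is only a hypothetical sketch of balanced random assignment. With 56 participants, the sample size mentioned in the discussion, each of the four cells gets 14 people.

```python
import itertools
import random

PHOTO = ("photo", "no photo")
TIMING = ("after every 10 questions", "only at the end")


def assign_conditions(participant_ids, seed=0):
    """Shuffle participants, then deal them round-robin into the four cells."""
    cells = list(itertools.product(PHOTO, TIMING))
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)  # seeded so the assignment is reproducible
    return {pid: cells[i % len(cells)] for i, pid in enumerate(ids)}
```

Dealing round-robin after a shuffle keeps the cells equal in size, which a coin flip per participant would not guarantee.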

The results of the post-questionnaire are represented in the graphs above. The study found that displaying a personal photo can have a stabilizing effect on participants: the element of self-reflection during the questionnaire made participants less likely to change their answers to compensate for their bad matches. Receiving intermediate recommendations, on the other hand, tended to change how much users approved of the bad matches by the end of the questionnaire.

Discussion

I thought this was one of those studies that proves something you already suspect. It would be interesting to see what online dating companies do with this information or with more in-depth studies. I do feel that testing only 56 people was a bit small for the user study, though; I would have liked to see a more exhaustive study with thousands of participants.

The TeeBoard: an Education-Friendly Construction Platform for E-Textiles and Wearable Computing

Authors

Grace Ngai, Stephen C.F. Chan, Joey C.Y. Cheung, Winnie W.Y. Lau

{ csgngai, csschan, cscycheung, cswylau }@comp.polyu.edu.hk

Department of Computing

Hong Kong Polytechnic University

Kowloon, Hong Kong

Summary

Wearable computing and e-textiles are an emerging field in contemporary fashion. However, existing technology is so complex that it is neither affordable nor feasible for the average hobbyist or consumer. The researchers' solution is the TeeBoard, a construction platform that aims to "lower the floor" for e-textiles and explores integrating the technology into education as a teaching tool.

For e-textile technology to be widely adopted as an educational tool, it should be robust enough for everyday use and rough handling, have a large error tolerance, and have a shallow learning curve. Current e-textile technology does not yet satisfy these requirements.

In addition to solving the problems listed above, the researchers set out several goals for their project: 1) the materials used should behave as closely as possible to their electrical equivalents, 2) the construction platform should be usable by people of all skill levels, and 3) the platform should be easy to reconfigure and debug.

Their solution was the TeeBoard, a wearable breadboard that can easily be configured to the user's wishes. The shirt uses an Arduino LilyPad microcontroller for signal processing and conductive thread instead of wires to achieve the robustness needed for everyday wear and tear.
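Part of what makes any breadboard, wearable or not, easy to reconfigure and debug is that connection points join into electrical nets. Asking "are these two points connected?" is then just a set lookup. The toy model below is not from the paper. It assumes each stitched thread or conductive strip links two named points, and the point names (`lilypad_pin9`, `row3`, and so on) are hypothetical.

```python
class Nets:
    """Union-find over named connection points on a (hypothetical) wearable board."""

    def __init__(self):
        self.parent = {}

    def find(self, point):
        """Return the representative point of the net that `point` belongs to."""
        self.parent.setdefault(point, point)
        while self.parent[point] != point:
            self.parent[point] = self.parent[self.parent[point]]  # path halving
            point = self.parent[point]
        return point

    def connect(self, a, b):
        """Record a conductive link, e.g. a stitched thread or a snap-on strip."""
        self.parent[self.find(a)] = self.find(b)

    def connected(self, a, b):
        return self.find(a) == self.find(b)
```

For example, after `connect("lilypad_pin9", "row3")` and `connect("row3", "led_anode")`, checking `connected("lilypad_pin9", "led_anode")` confirms the LED is wired to pin 9, while the pin stays unconnected to `"gnd"`.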

The shirts were used with several test groups, who were given simple tasks followed by incrementally harder ones to test the product's effectiveness. The results showed that the TeeBoard did in fact meet its requirements and that students became more interested in computational and electronic design.

Discussion

This is an interesting concept for more interactive applications of electronics theory. I think the main problem with the A&M program is that application is not stressed in a tactile way. Granted, we do use breadboards and circuits to illustrate concepts, but the concepts are rarely illustrated the way they would be used in the real world. I would love to see more interactive solutions like the one in this paper in the educational system.

The only problem I saw with the user study was that it was conducted with college classes that already had a significant background in electronics theory. I would have liked to see the product tested in high school settings, where students are less familiar with electronics theory.

PrintMarmoset: Redesigning the Print Button for Sustainability

Authors

Jun Xiao

Hewlett-Packard Laboratories

Palo Alto, CA USA

jun.xiao2@hp.com

Jian Fan

Hewlett-Packard Laboratories

Palo Alto, CA USA

jian.fan@hp.com

Summary

This paper focuses on Sustainable Interaction Design, which aims to alleviate the problems associated with dwindling natural resources by shifting from producing new products to producing products that support sustainable growth. Specifically, the authors redesign the print button to minimize erroneous prints and other paper waste and, in turn, promote sustainability.

Participants were studied to reveal what they printed and how often, and the results were critically examined to draw conclusions. The authors found that users printed digitally available information because it saved time (printing a map vs. using a GPS), it was easier to use (printing a PDF instead of reading it on screen), it signified importance (printing a website instead of emailing a link), or the information was volatile and needed to be preserved (news articles that are constantly updated). These cases were generalized into four main functions of printing: display, delivery, sharing, and storage.

While this paper did not aim to resolve all of these problems, it did attempt to minimize erroneous or unnecessary prints. People reported printing web content because they needed it, only to find the printout riddled with advertisements or broken formatting, which rendered it useless and sent it straight to the trash.

Their goal was a solution that would 1) require neither developers to modify existing websites nor users to change their existing print flow; 2) require the least amount of user input effort, if not zero; 3) offer the flexibility to let users choose what to print in addition to pre-defined filters or templates; 4) maintain a history of print activities for collecting and organizing web clips; and 5) raise awareness of the tool and build a “green” community around it.

The resulting solution was PrintMarmoset, a Firefox extension that lets the user select what actually needs to be printed. The user clicks the extension's icon, "strokes" through the web content that is unnecessary, and then clicks the icon again to print the selection.
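The stroke interaction can be modeled as hit-testing: any page block the stroke passes through is dropped, and everything else is printed. The real tool is a Firefox extension, and this Python sketch is not its code. It assumes, hypothetically, that the page has already been segmented into named rectangular blocks and that the stroke arrives as a list of sampled (x, y) points.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    """A rectangular region of the rendered page (page coordinates, pixels)."""
    name: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, px, py):
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h


def blocks_to_print(blocks, stroke):
    """Drop every block that the stroke passes through; keep the rest in page order."""
    return [b for b in blocks if not any(b.contains(px, py) for px, py in stroke)]
```

A short stroke across a sidebar ad, for instance, removes only that block, which matches the goal of needing as little user input as possible.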

People commented that PrintMarmoset is WYSIWYG and that the UI was easy to use. The authors noted that the tool did not satisfy all users, but that satisfying everyone was an impossible task.

Discussion

This seems like an awesome idea, to be honest. Many times I have wanted to print a website only to find that the advertisements completely ruined the print layout, or offset it so that it produced unneeded blank pages with the website printed only at the bottom. Those pages get thrown away as wasted prints, and paper is wasted unnecessarily. I'll have to try this extension to see just how user-friendly it is, but the concept itself seems promising.

Tuesday, March 2, 2010

Emotional Design

"The problem is that we still let logic make decisions for us"

Sentences like this in the prologue kind of scared me going into the book. However, I enjoyed this book more than his previous work. It gave more insight into why certain technologies succeed over others despite offering the same or even fewer capabilities than their competition. I also enjoyed how he spelled out what happens when a person is introduced to a new product.

Honestly, I finished the book with one desire in mind: I'd love to visit this guy's house. He kept referencing all the little things he has in his house, and I think it would be so cool to get to mess with it all one day.

Tuesday, February 16, 2010

The Inmates are Running the Asylum (Part 1)

I found it ironic that the author criticized programmers for making software suited to themselves, and yet a major part of the book was about things that were not intuitive for the author. In other words, the author assumed that what he wanted as an end user was what everyone else wanted, but that what a programmer wanted in software couldn't possibly be what everyone wanted.

To be honest, the first two chapters seemed like complaints from a guy who can't figure out how to work anything electronic in his life, but I do like how he stressed the importance of planning for user interaction at the beginning and making it key to a product's marketability.

It was also kind of cool to get a better understanding of what exactly creates user loyalty. I think this is why people will stick with things like iTunes or Google's search engine. Users feel no need to explore other options because they feel like the original met their needs precisely both in capability and desirability.

Tuesday, February 9, 2010

HeartBeat: An Outdoor Pervasive Game for Children

by

Remco Magielse

Panos Markopoulos

Eindhoven University of Technology

HeartBeat is an experiment in how sensing children's heart rate could promote outdoor activity, specifically through outdoor augmented reality games called "pervasive games." Pervasive games use technology such as Wi-Fi or GPS to augment reality; one example is the game Human Pacman.

Unfortunately, games like this require the user to be "head down," looking down at the device, which can reduce engagement with others. This is not ideal for children's development, and it makes such games an imperfect answer to the rise of video game consoles, which confine children to a single room.

HeartBeat is considered a "heads up" game: it requires little visual attention from children and lets them interact more with their environment and peers.

The game is a variation of capture the flag with two teams: attackers and defenders. Children hide at the beginning of the game, and then a randomized role assignment is broadcast to all handheld devices. The attackers try to tag defenders' devices to bring them over to the attacking team. One defender is given a special treasure to protect during the game.
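The role rules can be sketched in a few lines. This is a hypothetical model, not the game's actual software. The summary gives no team sizes, so the number of attackers is a parameter here. It also does not say what happens when the treasure holder is tagged, so this sketch leaves that case out.

```python
import random


def assign_roles(players, n_attackers, seed=None):
    """Randomly split players into attackers and defenders; one defender holds the treasure."""
    rng = random.Random(seed)
    shuffled = list(players)
    rng.shuffle(shuffled)
    attackers, defenders = shuffled[:n_attackers], shuffled[n_attackers:]
    if not attackers or not defenders:
        raise ValueError("both teams need at least one player")
    roles = {p: "attacker" for p in attackers}
    roles.update({p: "defender" for p in defenders})
    treasure_holder = rng.choice(defenders)
    return roles, treasure_holder


def tag(roles, defender):
    """A tagged defender switches to the attacking team."""
    if roles.get(defender) != "defender":
        raise ValueError(f"{defender!r} is not a defender")
    roles[defender] = "attacker"
```

Keeping the roles in one dictionary mirrors the broadcast step: every device can look up its own role from the same assignment.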

The game was played in a local park and in a local forest near the children's school. Games were limited to 4 minutes; if the defending team did not get caught in that time, it won.

The prototype itself was a device strapped around the chest to measure heart rate. When a player's heart rate passed the threshold (in this case 100 bpm), the player's device would beep, alerting others to their presence. They ran two different trials: in one, the device beeped every 1.5 seconds, and in the other, it beeped in sync with the player's heartbeat.
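The beeping rule reduces to a small function. This is a sketch of the behavior as summarized, not the prototype's firmware. The 100 bpm threshold and the 1.5-second fixed interval come from the study, while the mode names are made up. It is also an assumption that "passed the threshold" means strictly above 100 bpm.

```python
THRESHOLD_BPM = 100      # threshold used in the study
FIXED_INTERVAL_S = 1.5   # interval in the fixed-rate trial


def beep_interval(bpm, mode):
    """Seconds between beeps, or None while the heart rate is at or below the threshold."""
    if bpm <= THRESHOLD_BPM:
        return None
    if mode == "fixed":
        return FIXED_INTERVAL_S
    if mode == "heartbeat":
        return 60.0 / bpm  # one beep per heartbeat
    raise ValueError(f"unknown mode: {mode!r}")
```

At 120 bpm the heartbeat mode beeps every half second, noticeably faster than the fixed trial, so in that condition the beeps also reveal how hard a player is working.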

Overall, the authors reported no substantial increase in the children's physical activity with or without the heart rate sensor. The children also had mixed reviews about whether they appreciated the beeping aspect of the game.

Discussion:

I feel the game was not designed well to incorporate heart rate sensing. To be honest, the aspects the authors said went well seemed to be aspects of any normal outdoor game rather than the augmented aspect of the pervasive game.

The Voicebot: A Voice Controlled Robot Arm

Authors: Brandi House, Jonathan Malkin, Jeff Bilmes

Summary: In the past, people with severe physical impairments had few options for assistance with day-to-day activities. Normally they had to rely on others for help, which introduces an inconvenient dependency. Voicebot seeks to improve the independence of individuals with severe motor impairments by letting them control a robotic arm with their voice.

Joint Movement Studies

Three methods were used to control the arm: the Forward Kinematic (FK) Model, the Inverse Kinematic (IK) Model, and the Hybrid Model. The FK model had the user control each joint one at a time. This was easy to implement because user input is sent directly to the robotic arm. The IK model has the user control only the gripper (called the effector), and the joints move accordingly. The Hybrid model uses IK to move the shoulder and elbow and FK to move the wrist: since any point within reach can be reached using only two of the three joints, the shoulder and elbow handle position while the wrist is controlled directly.
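To see how the three models differ, here is a minimal sketch for a planar three-joint arm like the simulated 2D arm in the study. The link lengths, the use of the textbook two-link IK formula, and the choice to treat the wrist joint position as the IK target in the hybrid mode are all my assumptions, not details from the paper.

```python
import math


def forward_kinematics(angles, lengths):
    """FK: joint angles (radians, each relative to the previous link) -> (x, y) of every joint.

    The last point returned is the effector.
    """
    x = y = heading = 0.0
    points = []
    for angle, length in zip(angles, lengths):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points


def two_link_ik(x, y, l1, l2):
    """IK for shoulder and elbow: angles putting the end of link 2 at (x, y), or None if out of reach."""
    cos_elbow = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        return None
    elbow = math.acos(cos_elbow)  # picks one of the two mirror-image solutions
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def hybrid_step(angles, lengths, wrist_target, wrist_delta):
    """Hybrid control: IK places the wrist joint at wrist_target, FK nudges the wrist angle directly."""
    solution = two_link_ik(wrist_target[0], wrist_target[1], lengths[0], lengths[1])
    if solution is None:
        return angles  # unreachable target: leave the arm where it is
    shoulder, elbow = solution
    return (shoulder, elbow, angles[2] + wrist_delta)
```

In pure FK mode the user would edit the angle tuple one entry at a time; in pure IK mode a three-joint planar arm has infinitely many solutions for a given effector position, which is one reason the hybrid split is convenient.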

In a test, users moved a ball to 4 sequential locations using a simulated 2D arm. Apparently three of the five users did better with IK and two did better with FK. None seemed to prefer the Hybrid model.

The findings were extended to a 3D robotic arm with 5 degrees of freedom (shoulder rotation, shoulder bend, elbow bend, wrist rotate, and wrist bend). Only three can be changed at a time, so movement was separated into Position mode (gross detail), which activated the shoulder movements and elbow bend, and Orientation mode (fine detail), which activated the wrist. Users made a "ck" sound to switch between the two modes and a "ch" sound to open or close the gripper.
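The mode switching described above is essentially a tiny state machine. Here is a sketch; the class and joint names are hypothetical, and starting in Position mode with the gripper open is my assumption.

```python
# Joints each mode activates, following the description above.
MODE_JOINTS = {
    "position": ("shoulder_rotation", "shoulder_bend", "elbow_bend"),
    "orientation": ("wrist_rotate", "wrist_bend"),
}


class VoiceArmController:
    """Tracks which joints respond to voice input and whether the gripper is closed."""

    def __init__(self):
        self.mode = "position"       # assumed starting mode
        self.gripper_closed = False  # assumed starting gripper state

    def on_sound(self, sound):
        """Handle a recognized discrete sound and return the joints now under control."""
        if sound == "ck":
            self.mode = "orientation" if self.mode == "position" else "position"
        elif sound == "ch":
            self.gripper_closed = not self.gripper_closed
        return self.active_joints()

    def active_joints(self):
        return MODE_JOINTS[self.mode]
```

Because both commands are toggles, a single false positive for "ck" or "ch" silently flips the arm's state, so the user has to notice and undo it.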

This time the arm was tested with users unfamiliar with the device. They were taught how to control it and the results are as follows:

All of the users were able to finish, but several complained about how difficult it was. Controlling the arm by changing pitch was especially difficult, and there were several false positives for the "ch" and "ck" sounds.


Discussion:

This seems like a cool, novel idea, but it still has a long way to go before it is practical. As I was reading, it seemed like there would be better ways to emulate arm movement than vocal control of a robotic arm.

Thursday, February 4, 2010

Perceptual Interpretation of Ink Annotations on Line Charts

This technology has several possible extensions. For example, if a chart is paired with its data table, identifying which part of the graph is being highlighted could also highlight the corresponding data in the table.

The approach was to divide charts into maxima, minima, and slopes. Strokes were classified as either roughly parallel or roughly orthogonal to the plotted line, on the assumption that parallel strokes generally highlight slopes and orthogonal strokes generally highlight entire peaks or valleys.
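Here is a crude sketch of that classification rule. It is not the paper's recognizer: I assume the stroke and the chart share one coordinate system, I measure stroke direction from its first to its last point (which would not handle circled annotations), and I use a 45-degree cutoff between "parallel" and "orthogonal". All function names are hypothetical.

```python
import math


def local_extrema(ys):
    """Indices of interior local maxima and minima (peaks and valleys) of the plotted series."""
    return [i for i in range(1, len(ys) - 1)
            if (ys[i] - ys[i - 1]) * (ys[i + 1] - ys[i]) < 0]


def line_angle(u, v):
    """Angle in degrees between two undirected lines, in the range [0, 90]."""
    a = abs(math.degrees(math.atan2(u[1], u[0]) - math.atan2(v[1], v[0]))) % 180
    return min(a, 180 - a)


def interpret_stroke(xs, ys, stroke):
    """Classify an ink stroke against a line chart; return (kind, indices of the data points it refers to)."""
    (x0, y0), (x1, y1) = stroke[0], stroke[-1]
    cx = sum(x for x, _ in stroke) / len(stroke)
    # The chart segment whose midpoint is horizontally closest to the stroke's centre.
    seg = min(range(len(xs) - 1), key=lambda i: abs((xs[i] + xs[i + 1]) / 2 - cx))
    seg_dir = (xs[seg + 1] - xs[seg], ys[seg + 1] - ys[seg])
    if line_angle((x1 - x0, y1 - y0), seg_dir) < 45:
        return "slope", [seg, seg + 1]
    extrema = local_extrema(ys)
    if not extrema:
        return "extremum", []
    peak = min(extrema, key=lambda i: abs(xs[i] - cx))
    return "extremum", [peak - 1, peak, peak + 1]  # the peak or valley plus its neighbours
```

The returned indices are exactly what the data-table extension above would need: they name the rows to highlight.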

Discussion:

As it stands, the concept is very limited in application: it only works with line graphs, and the researchers themselves said more research was needed to determine whether their approach is effective. I would like to see the concept extended to general annotations, because I feel this would help in school lectures, especially when the lecturer has to draw attention to minute details within complex, intricate schematics, such as a particular part of a circuit diagram.

Tuesday, February 2, 2010

The Design of Everyday Things

From the first chapter of this book, I was a bit skeptical.

"When you have trouble with things--whether it's figuring out whether to push or pull a door...it's not your fault. Don't blame yourself: blame the designer."

Personally, I can understand the occasional mishap where you pull when you should push, but a blanket statement like "When you have trouble with things...blame the designer" is a bit drastic. There will always be at least a small learning curve for every device, including doorknobs and light switches. There will always be someone distracted enough not to make it through that learning curve, so there will always be someone to whom blame can be attributed.

As for the rest of the book, the author brought up very interesting aspects of the design of everyday things, and he achieved his goal: I will never see the design of everyday things the same way again. However, I don't believe he needed to be so verbose about it. I appreciated the thought that went into the psychology behind making mistakes, but I felt the first third of the book could have been condensed into two pages.

Overall, I did enjoy this book despite its verbosity. As I said, the author accomplished the goals he set forth at the beginning. I definitely see design a little differently now and will probably stop to think about the optimal design of an object before trying to fully understand its functions.

Monday, February 1, 2010

Ethnography: Efficient Parking Lot

My idea for an ethnography is to study parking behavior among the students who park in Lot 50. After gathering plenty of data on the way the lot fills its spaces, I'd like to be able to answer the following questions:

- Does it save more time to park at the far end or to wait for a spot to open up?

- If it depends, then when are the best times to wait for a spot and when are the best times to just suck it up and park at the end?

- What is the average time it takes for a really good parking spot to be reoccupied?

- In what order or pattern are the parking spots taken?

- How aggressive are drivers when it comes to getting a good parking spot?
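Two of these questions (reoccupancy time and fill order) could be answered directly from a simple observation log. Here is a sketch assuming each observation is recorded as a (spot, "leave" or "arrive", timestamp) tuple; that log format and the spot labels are hypothetical.

```python
from __future__ import annotations

from datetime import datetime


def reoccupancy_gaps(events: list[tuple[str, str, datetime]]) -> list[float]:
    """Seconds each spot stayed empty, from (spot, "leave" | "arrive", timestamp) observations."""
    left_at = {}
    gaps = []
    for spot, kind, ts in sorted(events, key=lambda e: e[2]):
        if kind == "leave":
            left_at[spot] = ts
        elif kind == "arrive" and spot in left_at:
            gaps.append((ts - left_at.pop(spot)).total_seconds())
    return gaps


def fill_order(events: list[tuple[str, str, datetime]]) -> list[str]:
    """Spots in the order they were first taken during the observation period."""
    order = []
    for spot, kind, _ in sorted(events, key=lambda e: e[2]):
        if kind == "arrive" and spot not in order:
            order.append(spot)
    return order
```

Averaging the gaps for the spots nearest the buildings would give a rough answer to the reoccupancy question; splitting the log by time of day would address when waiting is worth it.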

Tuesday, January 26, 2010

Virtual Shelves: Interactions with Orientation Aware Devices

Launching an application on a mobile device can require several keystrokes, depending on the context. This requires the user to look at the display even when it would be better to keep their eyes off the device, such as when using a phone while driving. Virtual Shelves attempts to alleviate this problem by using the user's spatial awareness and gestures as input to the device, so no visual interaction with the device is required. A hemisphere of options directly in front of the user can be activated by moving the arm into specified quadrants.

Two experiments were done to test the efficacy of Virtual Shelves:

- Measuring Kinesthetic Accuracy - studying how accurately people can inherently land in a specific quadrant given its approximate theta and phi coordinates

- Efficacy of Virtual Shelves - The hemisphere in front of the user was divided into regions whose size was based on the general accuracy obtained in experiment 1 (±15 degrees). Each region was assigned an arbitrary application; when the user pressed the center button on the phone while pointing into a region, that application launched without the user having to navigate menus with the D-pad. The results were compared with navigation using the D-pad.
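The region lookup in the second experiment can be sketched as binning the arm's orientation into a grid. I assume theta is the horizontal angle and phi the vertical angle, both from -90 to 90 degrees, and that ±15 degrees of accuracy translates into 30-degree-wide regions; the shortcut table is hypothetical.

```python
REGION_SIZE_DEG = 30                     # assumed from the +/-15 degree accuracy
REGIONS_PER_AXIS = 180 // REGION_SIZE_DEG


def region_for(theta_deg, phi_deg):
    """Map an arm orientation in the front hemisphere to a (column, row) region, or None outside it."""
    if not (-90 <= theta_deg <= 90 and -90 <= phi_deg <= 90):
        return None
    col = min(int((theta_deg + 90) // REGION_SIZE_DEG), REGIONS_PER_AXIS - 1)
    row = min(int((phi_deg + 90) // REGION_SIZE_DEG), REGIONS_PER_AXIS - 1)
    return col, row


# Hypothetical shortcut table: each region is assigned an arbitrary application.
SHORTCUTS = {(3, 3): "music player", (0, 3): "calendar"}


def on_center_button(theta_deg, phi_deg):
    """Application to launch when the center button is pressed, or None if the region is empty."""
    return SHORTCUTS.get(region_for(theta_deg, phi_deg))
```

The min() clamp keeps orientations exactly at +90 degrees in the last region instead of creating an extra one.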

The results showed that past the initial training use, people were able to access applications faster using Virtual Shelves.

Discussion:

The authors mention this setback, but Virtual Shelves requires the phone to be capable of motion capture in order to function. Also, the motions Virtual Shelves needs use gross motor skills, as opposed to the fine motor skills needed to navigate menus with a D-pad. This could be socially awkward for the user, especially in a crowded environment. People generally do not like drawing attention to themselves in order to use everyday devices, yet Virtual Shelves makes the user look like they're guiding in an airplane when they just want to launch their phone's music player. I think this could be a serious shortcoming of Virtual Shelves, but it could be minimized if the user didn't have to outstretch their arm to select a region.

Disappearing Mobile Devices

Summary:

Mobile devices have been growing smaller and smaller as technology permits, as seen in the progression from notebook computers and PDAs to interactive watches and rings. However, the limiting factor seems to be not the size of the circuitry but the size of the physical user interface. The paper explores the possibilities and limitations of "disappearing mobile devices," which do not have a large physical user interface but instead recognize gestures across their surface as input.

When devices are miniaturized, several physical input techniques become impractical. For example, a capacitive touch pad shrunk far enough effectively becomes a simple touch sensor. Pressure sensing also becomes skewed at small scales, especially when the sensor is on human skin, because pressure sensors generally need to be placed on hard surfaces. Two motion-sensing text-entry techniques were implemented for the user study: EdgeWrite, where the shapes of letters are modified to minimize multidirectional strokes (circles, arcs, etc.), and Graffiti, where letters are still drawn in single strokes but are more natural and therefore rely on relative positions.
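As I understand it, EdgeWrite recognizes a character from the sequence of corners of a small square that the stroke visits, which is why it tolerates imprecise movement well. Here is a toy sketch of that idea. The corner numbering, the 25% corner margin, and especially the two-letter alphabet are made up for illustration and are NOT EdgeWrite's real character set.

```python
def corner_of(x, y, margin=0.25):
    """Corner index for a normalized point (0..1, y pointing down), or None in the middle area.

    0 = top-left, 1 = top-right, 2 = bottom-left, 3 = bottom-right.
    """
    horiz = 0 if x < margin else 1 if x > 1 - margin else None
    vert = 0 if y < margin else 1 if y > 1 - margin else None
    if horiz is None or vert is None:
        return None
    return vert * 2 + horiz


# Hypothetical alphabet mapping corner sequences to letters (not the real EdgeWrite set).
ALPHABET = {(0, 2, 3): "L", (0, 1, 2, 3): "Z"}


def recognize(points):
    """Collapse a stroke into the sequence of distinct corners it hits, then look it up."""
    seq = []
    for x, y in points:
        c = corner_of(x, y)
        if c is not None and (not seq or seq[-1] != c):
            seq.append(c)
    return ALPHABET.get(tuple(seq))
```

Because only the order of corners matters, the exact path between corners is ignored, unlike a shape-based recognizer for Graffiti.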

[Figures: EdgeWrite; Graffiti]

The user study found that EdgeWrite results on a disappearing mobile device were competitive with those on larger devices, but the error rate for Graffiti nearly doubled, which may make it impractical. Based on these findings, the authors concluded that miniaturization could still yield meaningful interaction even at such a small scale.

Discussion:

Mobile devices becoming smaller and smaller is a very popular idea, but I don't really believe it's the future of electronics. Especially in the cell phone market, the trend toward smaller phones was only temporary; smartphones were embraced more readily instead. Rather than continuing to shrink, phones have grown more powerful and consequently larger. Computers, however, do seem to be following the trend of growing smaller: with the advent of the netbook, portability seems increasingly attractive. I do not, however, foresee micro-laptops the size of a dime being the future. Rather, I see phones and computers converging, with some device bridging the gap between smartphones and netbooks.

Enabling Always-Available Input with Muscle-Computer Interfaces

Summary:

Our fingers make us among the most dexterous creatures on the planet, allowing us to interact with our environment in very intricate ways. However, when interacting with computing devices we must always go through a physical control such as a keyboard, mouse, or joystick. This paper explores using an EMG muscle-sensing band around the forearm to determine finger movements, with the goal of interacting with devices without physically touching them. One example is using an mp3 player while running: no matter where the player is placed on the body, it is very inconvenient to manipulate while moving, often forcing the user to stop running to change a song or adjust the volume. The authors therefore proposed that a user wearing the muscle-sensing band could make a series of gestures that correspond to functions of the mp3 player. This would leave the user's hands free, or at least free to do something else.

The user study was carried out in three parts, each testing whether certain gestures could be recognized with different grips:

- small or no object in hand

- tool in hand

- heavy load in hand

The results showed 79% accuracy while not holding anything, 85% accuracy while holding a travel mug, and 88% accuracy while carrying a weighted bag.
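For intuition on how accuracy figures like these are produced, here is a minimal sketch of a common EMG gesture-recognition pipeline: per-channel root-mean-square (RMS) amplitude features over a window of samples, a classifier, and accuracy on labeled windows. The paper's actual features and classifier may well differ; I swapped in a simple nearest-centroid classifier, and all names here are hypothetical.

```python
import math


def rms_features(window):
    """Root-mean-square amplitude per electrode channel for one window of EMG samples.

    window: list of samples, each a list with one value per channel.
    """
    channels = len(window[0])
    return [math.sqrt(sum(s[c] ** 2 for s in window) / len(window)) for c in range(channels)]


class NearestCentroid:
    """Stand-in classifier: picks the gesture whose mean feature vector is closest."""

    def fit(self, features, labels):
        sums, counts = {}, {}
        for f, label in zip(features, labels):
            acc = sums.setdefault(label, [0.0] * len(f))
            for i, v in enumerate(f):
                acc[i] += v
            counts[label] = counts.get(label, 0) + 1
        self.centroids = {label: [v / counts[label] for v in acc] for label, acc in sums.items()}
        return self

    def predict(self, f):
        return min(self.centroids, key=lambda label: math.dist(f, self.centroids[label]))


def accuracy(model, features, labels):
    """Fraction of windows whose predicted gesture matches the true label."""
    correct = sum(model.predict(f) == label for f, label in zip(features, labels))
    return correct / len(labels)
```

In a real study the accuracy would be measured on windows held out from training, separately for each grip condition.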

Discussion:

I feel this concept could easily be extended to other areas as well. If the accuracy of EMG devices could be improved, sign language could be translated to text very easily, or prosthetic limbs could be removed more easily if the muscle sensors were external rather than embedded. I understand that the user study targeted a very particular set of gestures and grips, but I definitely agree with the authors' statement that more thorough and robust testing could yield much more significant results.

I admit I may have chosen to read this paper partly because it is very close to my senior design project, which I thought was convenient.

Thursday, January 21, 2010